DataCrunch Blog

NEW Legacy

NVIDIA H200 vs H100: Key Differences for AI Workloads

NEW Legacy

NVIDIA GB200 NVL72 for AI Training and Inference

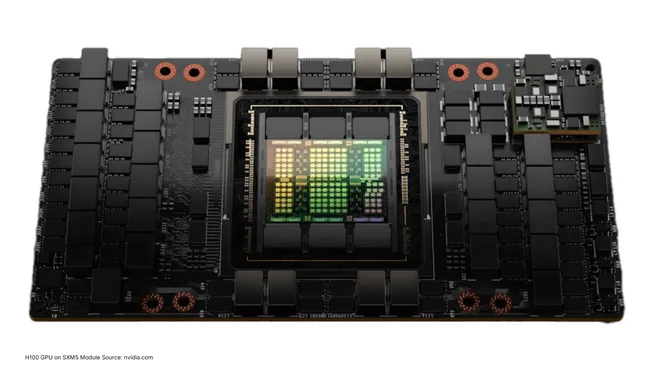

PCIe and SXM5 Comparison for NVIDIA H100 Tensor Core GPUs

Legacy

NVIDIA H200 – How 141GB HBM3e and 4.8TB/s Memory Bandwidth Impact ML Performance

Legacy

NVIDIA Blackwell B100, B200 GPU Specs and Availability

Legacy

NVIDIA A100 GPU Specs, Price and Alternatives in 2024

Legacy

NVIDIA A100 PCIe vs SXM4 Comparison and Use Cases in 2024

Legacy

A100 vs V100 – Compare Specs, Performance and Price in 2024

Legacy

NVIDIA A100 40GB vs 80GB GPU Comparison in 2024

Legacy

NVIDIA H100 GPU Specs and Price for ML Training and Inference

Legacy

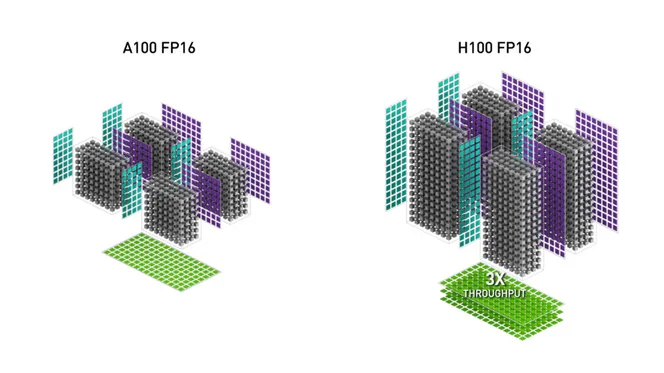

NVIDIA H100 vs A100 GPUs – Compare Price and Performance for AI Training and Inference

Legacy