NEW FEATURE

Dynamic pricing

Save up to 50% on GPU instances

This transparent pricing model adjusts daily based on market demand, providing a flexible and cost-effective alternative to fixed pricing for cloud GPU instances.

Users can optimize expenses by benefiting from lower prices during periods of low demand while enjoying predictable daily adjustments.

Pricing comparison

A100 SXM 80GB and 40GB instances

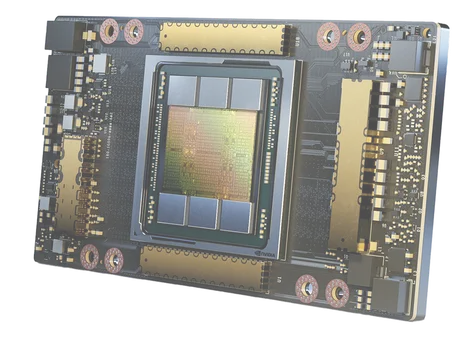

At the forefront of digital intelligence

Our servers exclusively use the SXM4 'for NVLink' module, which offers a memory bandwidth of over 2 TB/s and up to 600 GB/s of P2P bandwidth.

The A100 80GB reaches up to 1.3 TB of unified memory per node and delivers up to a 3x throughput increase over the A100 40GB.


80GB vs 40GB

Push the limits of compute

| GPU model | Memory type | Memory clock speed | Memory bandwidth |
|---|---|---|---|
| A100 SXM4 80GB | HBM2e | 3.2 Gbps | 2,039 GB/s |
| A100 SXM4 40GB | HBM2 | 2.4 Gbps | 1,555 GB/s |

A100 virtual dedicated servers are powered by:

Up to 8 NVIDIA® A100 80GB GPUs, each containing 6912 CUDA cores and 432 Tensor Cores.

We only use the SXM4 'for NVLink' module, which offers a memory bandwidth of over 2 TB/s and up to 600 GB/s of P2P bandwidth.

Second-generation AMD EPYC Rome CPUs, with up to 192 threads and a boost clock of 3.3 GHz.

The name 8A100.176V is composed as follows: 8x A100 GPUs, 176 CPU core threads, and V for virtualized.
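The naming scheme above can be decoded programmatically. The following is a minimal sketch, not an official parser: the `InstanceSpec` type and `parse_instance_name` function are hypothetical names, and the handling of a middle segment such as `40S` (as in `1A100.40S.22V`) is an assumption inferred from the instance names listed on this page.

```python
import re
from typing import NamedTuple, Optional


class InstanceSpec(NamedTuple):
    """Fields decoded from an instance type name (hypothetical helper type)."""
    gpu_count: int
    variant: Optional[str]  # middle segment such as "40S"; None when absent
    cpu_threads: int


# <gpus>A100[.<variant>].<threads>V, e.g. "8A100.176V" or "1A100.40S.22V".
# Assumption: the optional middle segment is digits followed by one capital letter.
_NAME_RE = re.compile(r"^(\d+)A100(?:\.(\d+[A-Z]))?\.(\d+)V$")


def parse_instance_name(name: str) -> InstanceSpec:
    """Split an A100 instance name into GPU count, variant, and CPU threads."""
    match = _NAME_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognized instance name: {name!r}")
    gpus, variant, threads = match.groups()
    return InstanceSpec(int(gpus), variant, int(threads))


if __name__ == "__main__":
    print(parse_instance_name("8A100.176V"))
    print(parse_instance_name("1A100.40S.22V"))
```

Names that do not end in `V` are rejected here because every instance type on this page is virtualized; a different suffix would need its own rule.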

| Instance type | GPU model | GPUs | CPU threads | RAM (GB) | VRAM (GB) | P2P | On demand price | Dynamic price | Spot price |
|---|---|---|---|---|---|---|---|---|---|
| 1A100.40S.22V | A100 SXM4 40GB | 1 | 22 | 120 | 40 | / | $0.72/h | $0.85/h | $0.25/h |
| 8A100.40S.176V | A100 SXM4 40GB | 8 | 176 | 960 | 320 | 600 GB/s | $5.77/h | $6.77/h | $2.03/h |
| 1A100.22V | A100 SXM4 80GB | 1 | 22 | 120 | 80 | / | $1.16/h | $1.26/h | $0.63/h |
| 2A100.44V | A100 SXM4 80GB | 2 | 44 | 240 | 160 | 100 GB/s | $2.32/h | $2.52/h | $1.26/h |
| 4A100.88V | A100 SXM4 80GB | 4 | 88 | 480 | 320 | 300 GB/s | $4.64/h | $5.04/h | $2.52/h |
| 8A100.176V | A100 SXM4 80GB | 8 | 176 | 960 | 640 | 600 GB/s | $9.28/h | $10.09/h | $5.04/h |
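To compare the price columns in the table, a short calculation can help. The sketch below copies the hourly prices of three rows as listed and computes the per-GPU rate and the relative difference between two columns. The names `PRICES`, `per_gpu_rate`, `relative_saving`, and `estimate_cost` are hypothetical, and the cost estimate assumes a constant hourly rate, which ignores the daily adjustments of dynamic pricing.

```python
# Hourly prices in USD copied from the table: (gpus, on_demand, dynamic, spot).
PRICES = {
    "1A100.40S.22V": (1, 0.72, 0.85, 0.25),
    "1A100.22V": (1, 1.16, 1.26, 0.63),
    "8A100.176V": (8, 9.28, 10.09, 5.04),
}

# Position of each price column inside a PRICES tuple.
COLUMNS = {"on_demand": 1, "dynamic": 2, "spot": 3}


def per_gpu_rate(instance: str, column: str) -> float:
    """Hourly price divided by the number of GPUs in the instance."""
    row = PRICES[instance]
    return row[COLUMNS[column]] / row[0]


def relative_saving(instance: str, reference: str, column: str) -> float:
    """Fraction saved by paying `column` instead of `reference` (negative if dearer)."""
    row = PRICES[instance]
    return 1.0 - row[COLUMNS[column]] / row[COLUMNS[reference]]


def estimate_cost(instance: str, column: str, hours: float) -> float:
    """Total cost at a constant hourly rate (an assumption; real rates may change)."""
    return PRICES[instance][COLUMNS[column]] * hours


if __name__ == "__main__":
    name = "8A100.176V"
    print(f"{name} on demand per GPU: ${per_gpu_rate(name, 'on_demand'):.2f}/h")
    print(f"{name} spot vs on demand: {relative_saving(name, 'on_demand', 'spot'):.1%}")
    print(f"{name} 24 h on spot: ${estimate_cost(name, 'spot', 24):.2f}")
```

Keeping the prices in a plain dictionary makes it easy to refresh the figures whenever the listed rates change.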